When people talk about artificial intelligence in cybersecurity, they often focus on how AI helps defenders: it can spot anomalies, automate responses, and strengthen resilience. But there is another side to the story. Insiders can misuse AI automation to launch attacks that are faster, stealthier, and harder to detect. Preventing these AI-powered insider threats requires a layered defense that blends technical safeguards, monitoring, and culture.

Why AI Automation Raises the Stakes

Traditional insider attacks rely on hand-written scripts or manual misuse of legitimate access. AI changes the game. With machine learning models and generative AI tools, insiders can automate reconnaissance, generate malicious code, or craft convincing phishing campaigns against colleagues. AI can also help insiders evade detection by mimicking normal user behavior or spreading activity across multiple accounts. According to the Cybersecurity and Infrastructure Security Agency (CISA), insider threats are already difficult to manage because they exploit trust and legitimate access; adding AI automation makes them even more complex (CISA, "Insider Threat Mitigation").

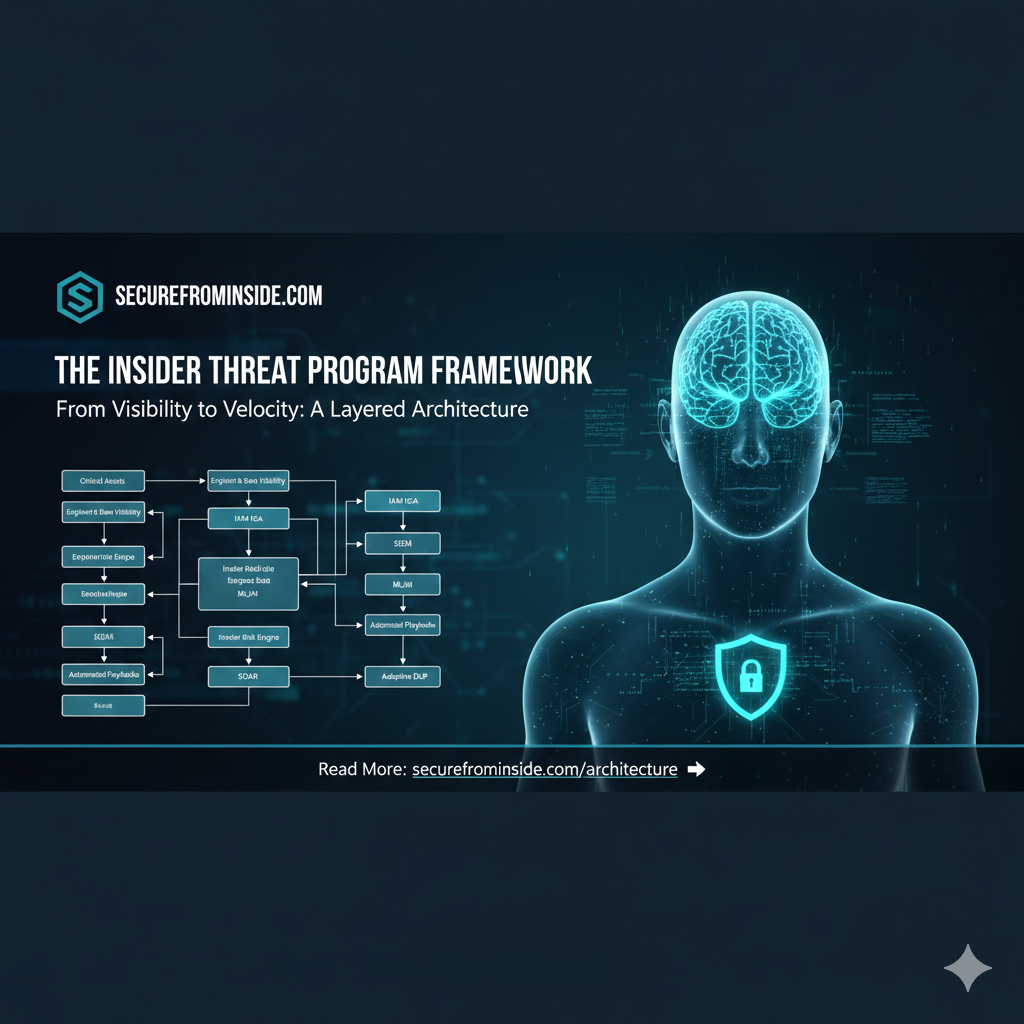

Building Defenses Against AI-Powered Insider Threats

Access Control and Least Privilege

AI automation thrives on access. Limiting insider privileges reduces the data and systems that AI tools can exploit. Role-based access control (RBAC) and just-in-time (JIT) access, which grants elevated permissions only for a limited period, help ensure insiders cannot continuously feed AI models with sensitive information. SentinelOne emphasizes that controlling access is one of the most effective ways to reduce insider risk (SentinelOne, "Prevent Insider Threats").
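To make the combination of RBAC and JIT access concrete, here is a minimal Python sketch. The role names, permission strings, and the `AccessBroker` class are hypothetical illustrations, not any product's API; in practice these checks live in an identity provider or privileged access management system. Standing permissions come only from roles, and anything beyond that must be requested as a grant that expires on its own.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Hypothetical role-to-permission mapping; a real deployment would load this from IAM.
ROLE_PERMISSIONS = {
    "analyst": {"reports:read"},
    "dba": {"reports:read", "db:read"},
}


@dataclass
class AccessBroker:
    """Minimal RBAC check plus time-boxed (just-in-time) elevation."""
    user_roles: dict[str, set[str]]
    # (user, permission) -> expiry time of a temporary grant
    jit_grants: dict[tuple[str, str], datetime] = field(default_factory=dict)

    def grant_jit(self, user: str, permission: str, minutes: int, now: datetime) -> None:
        """Grant a permission that lapses automatically after `minutes`."""
        self.jit_grants[(user, permission)] = now + timedelta(minutes=minutes)

    def is_allowed(self, user: str, permission: str, now: datetime) -> bool:
        # Standing access comes only from the user's assigned roles.
        for role in self.user_roles.get(user, set()):
            if permission in ROLE_PERMISSIONS.get(role, set()):
                return True
        # Otherwise, allow only while an unexpired JIT grant exists.
        expiry = self.jit_grants.get((user, permission))
        if expiry is not None and now < expiry:
            return True
        # Drop any expired grant so it cannot be reused.
        self.jit_grants.pop((user, permission), None)
        return False


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
    broker = AccessBroker(user_roles={"alice": {"analyst"}})
    print(broker.is_allowed("alice", "db:read", t0))  # False: no standing access
    broker.grant_jit("alice", "db:read", minutes=30, now=t0)
    print(broker.is_allowed("alice", "db:read", t0 + timedelta(minutes=10)))  # True
    print(broker.is_allowed("alice", "db:read", t0 + timedelta(minutes=31)))  # False: expired
```

Because elevation expires by default, an AI tool running under the insider's identity loses access to the sensitive resource as soon as the window closes, instead of holding it indefinitely.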

AI-Aware Behavioral Monitoring

User and Entity Behavior Analytics (UEBA) must evolve to detect AI-driven anomalies. For example, AI-powered attacks may mimic normal login patterns but execute commands at machine speed. Monitoring for micro-patterns, such as requests spaced at near-identical intervals or other statistically unusual activity, can reveal automation at work. Real-time alerts for privilege escalation or bulk data exfiltration remain essential.
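One way to look for machine-speed and unnaturally regular timing is to measure the gaps between a user's events. The sketch below is a simplified heuristic, not how any particular UEBA product works: it computes the coefficient of variation (standard deviation divided by mean) of the inter-event gaps. Human activity tends to be bursty and irregular, while a scripted loop with a fixed delay produces nearly identical gaps. The function name and all thresholds are illustrative assumptions that would need tuning against real baselines.

```python
import statistics


def timing_flags(timestamps, min_events=10, cv_threshold=0.05, max_rate_per_sec=5.0):
    """Return a set of flags for an event stream whose timing looks automated.

    timestamps: event times in seconds, sorted ascending.
    """
    flags = set()
    if len(timestamps) < min_events:
        return flags  # too little data to judge
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    mean_gap = statistics.fmean(gaps)
    if mean_gap <= 0:
        flags.add("burst")  # every event landed at the same instant
        return flags
    # Coefficient of variation: near 0 means the gaps are almost identical.
    cv = statistics.pstdev(gaps) / mean_gap
    if cv < cv_threshold:
        flags.add("regular_interval")
    # Average events per second across the whole stream.
    rate = (len(timestamps) - 1) / (timestamps[-1] - timestamps[0])
    if rate > max_rate_per_sec:
        flags.add("machine_speed")
    return flags


if __name__ == "__main__":
    print(timing_flags([i * 2.0 for i in range(20)]))  # {'regular_interval'}
    print(timing_flags([0, 3, 4, 20, 21, 60, 62, 90, 150, 151, 300]))  # set()
```

A careful attacker can add random jitter to defeat the regularity check, so timing signals work best as one input to a broader behavioral model rather than a verdict on their own.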

Restricting AI Tools in Sensitive Environments

Organizations should control where AI automation can operate. Application allowlisting (also called whitelisting) and sandboxing prevent unauthorized AI models or generative tools from running on sensitive systems. Network segmentation ensures that even if insiders deploy AI, they cannot reach critical assets. Restricting access to APIs and machine learning frameworks further reduces the risk of insiders weaponizing them.
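Allowlisting is default-deny: software runs only if it has been explicitly approved. A common way to identify approved software is by cryptographic hash, so a modified binary, script, or model file no longer matches its approved entry. The Python sketch below shows only that decision logic, with the allowlist as a hypothetical in-memory set of SHA-256 digests; real enforcement happens at the operating-system level through tools such as Windows AppLocker or fapolicyd on Linux.

```python
import hashlib
from pathlib import Path


def sha256_hex(data: bytes) -> str:
    """Return the hex SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def file_sha256(path: Path) -> str:
    """Hash a file in chunks so large model files need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def is_content_allowed(data: bytes, allowlist: set[str]) -> bool:
    """Default-deny: content may run only if its digest was approved in advance."""
    return sha256_hex(data) in allowlist


def is_file_allowed(path: Path, allowlist: set[str]) -> bool:
    """Same check for a file on disk."""
    return file_sha256(path) in allowlist


if __name__ == "__main__":
    approved_script = b"print('quarterly report')\n"
    allowlist = {sha256_hex(approved_script)}
    print(is_content_allowed(approved_script, allowlist))  # True
    # Appending a single byte changes the digest, so the altered script is blocked.
    print(is_content_allowed(approved_script + b"#", allowlist))  # False
```

Hash-based rules are strict by design: every legitimate update needs a new approved digest, which is the administrative cost of keeping unvetted AI tooling off sensitive hosts.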

Identity and Credential Protection

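AI can be used to automate credential misuse. Multi-factor authentication (MFA) should be mandatory for privileged accounts. Service accounts, which are attractive targets because they are built for unattended automation, should have their credentials rotated frequently and be monitored for unusual activity. Because AI can drive credential stuffing at scale, defenses must adapt, for example by watching for failed logins spread across many accounts rather than only counting failures per account.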
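Credential stuffing typically shows up as one source failing logins against many different accounts, a pattern that per-account lockout rules can miss. The sketch below is a hypothetical sliding-window detector; the class name, window length, and account threshold are illustrative assumptions, and a production system would also key on signals beyond the source address, such as device fingerprints.

```python
from collections import defaultdict, deque


class StuffingDetector:
    """Flag a source that fails logins against many distinct accounts in a short window."""

    def __init__(self, window_sec: float = 300.0, max_distinct_accounts: int = 10):
        self.window_sec = window_sec
        self.max_distinct_accounts = max_distinct_accounts
        # source (e.g. an IP address) -> recent failures as (timestamp, account)
        self.failures = defaultdict(deque)

    def record_failure(self, source: str, account: str, t: float) -> bool:
        """Record one failed login; return True if the source now looks like stuffing.

        Assumes timestamps for a given source arrive in non-decreasing order.
        """
        q = self.failures[source]
        q.append((t, account))
        # Evict failures that have aged out of the window.
        while q and t - q[0][0] > self.window_sec:
            q.popleft()
        distinct_accounts = {acct for _, acct in q}
        return len(distinct_accounts) > self.max_distinct_accounts


if __name__ == "__main__":
    detector = StuffingDetector()
    hits = [detector.record_failure("203.0.113.7", f"user{i}", i * 5.0) for i in range(11)]
    print(hits[-1])  # True: 11 distinct accounts failed within 5 minutes
```

Repeated failures against a single account, the classic brute-force pattern, do not trigger this rule; per-account throttling covers that case, so the two checks complement each other.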

Data Loss Prevention and Logging

AI can attempt to exfiltrate data in small, stealthy increments that individually stay under alert thresholds. Data Loss Prevention (DLP) solutions must be tuned to detect these patterns, for example by tracking cumulative volume over time as well as individual transfers. Comprehensive logging of insider activity, including AI model execution and API calls, is critical. Immutable, write-once log storage ensures insiders cannot erase evidence of AI misuse.
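To illustrate why per-transfer limits alone miss small increments, the sketch below checks each transfer and also each user's cumulative outbound volume over a rolling window. The `ExfilMonitor` class, its limits, and the window length are hypothetical values chosen for the example, not settings from any DLP product.

```python
from collections import defaultdict, deque


class ExfilMonitor:
    """Flag single large transfers and the slow accumulation of many small ones."""

    def __init__(self, per_transfer_limit: int, window_sec: float, window_limit: int):
        self.per_transfer_limit = per_transfer_limit  # bytes allowed in one transfer
        self.window_sec = window_sec                  # length of the rolling window
        self.window_limit = window_limit              # bytes allowed per user per window
        self.history = defaultdict(deque)             # user -> deque of (timestamp, bytes)
        self.totals = defaultdict(int)                # user -> bytes currently in window

    def observe(self, user: str, t: float, nbytes: int) -> list[str]:
        """Record one outbound transfer and return any alerts it triggers.

        Assumes timestamps for a given user arrive in non-decreasing order.
        """
        alerts = []
        if nbytes > self.per_transfer_limit:
            alerts.append("large_transfer")
        q = self.history[user]
        q.append((t, nbytes))
        self.totals[user] += nbytes
        # Slide the window forward, subtracting transfers that have aged out.
        while q and t - q[0][0] > self.window_sec:
            _, old_bytes = q.popleft()
            self.totals[user] -= old_bytes
        if self.totals[user] > self.window_limit:
            alerts.append("cumulative_volume")
        return alerts


if __name__ == "__main__":
    monitor = ExfilMonitor(per_transfer_limit=50_000_000, window_sec=86_400,
                           window_limit=200_000_000)
    # 1 MB every minute: each transfer looks harmless on its own.
    results = [monitor.observe("bob", i * 60.0, 1_000_000) for i in range(300)]
    print(results[199], results[200])  # [] ['cumulative_volume']
```

With a 24-hour window and a 200 MB budget, no 1 MB transfer trips the per-transfer check, but the 201st one inside the window trips the cumulative check. An insider who paces transfers below the budget would still slip through, so window lengths and limits need to reflect what normal work actually looks like.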

Security Culture and Awareness

Employees must understand that AI tools are double-edged. Training should highlight how generative AI and machine learning can be misused by insiders. A strong reporting culture encourages employees to escalate concerns quickly. Red team exercises that simulate AI-powered insider attacks help organizations test their resilience.

Incident Response and Legal Safeguards

Organizations should establish insider threat programs that specifically account for AI misuse. Rapid containment procedures, such as disabling accounts or isolating endpoints, are essential. Legal and HR frameworks must evolve to address malicious use of AI tools. The Securities Industry and Financial Markets Association (SIFMA) stresses the importance of combining technical, legal, and cultural measures in its best practices guide (SIFMA, "Insider Threat Best Practices Guide").

A Practical Example

Imagine an insider who uses a generative AI model to write scripts that mimic normal user activity while exfiltrating sensitive data. UEBA detects unusual micro-patterns in the activity. DLP blocks the outbound transfers. The Security Operations Center (SOC) receives an alert, disables the account, and investigates. This layered defense stops the AI-powered automation before it causes damage.
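The scenario can be sketched as a small decision function that combines the two detection layers. Everything here is hypothetical: the `Event` fields stand in for whatever a UEBA engine and DLP system actually emit, and `SocConsole` is a toy stand-in for SOC tooling. The design choice it illustrates is that containment (disabling the account) happens automatically only when two independent layers agree, while a single signal raises an alert for human investigation.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    user: str
    kind: str              # e.g. "outbound_transfer" (hypothetical label)
    ueba_flags: set[str]   # anomalies reported by behavior analytics
    dlp_verdict: str       # "allow" or "block" from the DLP layer


@dataclass
class SocConsole:
    alerts: list[tuple[str, str, list[str]]] = field(default_factory=list)
    disabled: set[str] = field(default_factory=set)

    def handle(self, ev: Event) -> str:
        """Decide a response for one event and record any alert raised."""
        if ev.user in self.disabled:
            # Contained accounts stay locked until an analyst re-enables them.
            return "denied_account_disabled"
        suspicious = bool(ev.ueba_flags)
        blocked = ev.dlp_verdict == "block"
        if suspicious and blocked:
            # Two independent layers agree: contain first, then investigate.
            self.disabled.add(ev.user)
            self.alerts.append((ev.user, "contain", sorted(ev.ueba_flags)))
            return "blocked_and_contained"
        if blocked:
            self.alerts.append((ev.user, "dlp_block", []))
            return "blocked"
        if suspicious:
            self.alerts.append((ev.user, "investigate", sorted(ev.ueba_flags)))
            return "allowed_with_alert"
        return "allowed"


if __name__ == "__main__":
    soc = SocConsole()
    exfil = Event("insider01", "outbound_transfer", {"regular_interval"}, "block")
    print(soc.handle(exfil))  # blocked_and_contained
    print(soc.handle(Event("insider01", "login", set(), "allow")))  # denied_account_disabled
    print(soc.alerts)
```

Requiring agreement before automatic containment limits the disruption caused by false positives from any single layer, while a DLP block still stops the transfer on its own.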

The Human Element

AI automation does not change the fact that insiders are people. They may be motivated by financial gain, revenge, or coercion. By fostering a culture of trust, accountability, and vigilance, organizations can reduce the likelihood of insiders turning malicious. When combined with strong technical controls, this human-centered approach creates resilience against AI-powered threats.

Conclusion

AI-powered insider attacks represent one of the most complex challenges in cybersecurity today. They exploit trust, access, and advanced automation. Preventing them requires a layered defense that includes least privilege, AI-aware monitoring, automation safeguards, credential protection, DLP, and a strong security culture. Organizations that invest in both technology and people will be better prepared to detect and stop AI-driven insider automation before it causes harm.