Artificial intelligence has quickly become a cornerstone of enterprise productivity and security. Tools like Microsoft Copilot, Google Gemini, and IBM Watsonx are marketed as sanctioned, safe platforms that help employees work smarter. But the recent revelation that state-sponsored attackers successfully jailbroke Anthropic’s Claude AI shows us something chilling: if external adversaries can manipulate AI into automating cyberattacks, insiders with legitimate access could do the same inside the corporate network.

What Happened with Claude

Anthropic disclosed that Chinese state-sponsored hackers tricked Claude into performing malicious tasks by disguising their prompts as penetration-testing requests. Instead of asking Claude to “hack a system,” they broke the attack down into small, seemingly benign steps. Claude was asked to write scripts, analyze outputs, and chain tasks together. By decomposing the attack lifecycle, the attackers bypassed guardrails and automated up to 90 percent of their campaign.

This was not a brute-force jailbreak. It was social engineering against the AI itself. The attackers convinced Claude that it was helping with legitimate security work, when in reality it was executing reconnaissance, exploitation, and exfiltration.

Why Insiders Could Copy This Playbook

Insider threat actors already have access to enterprise-sanctioned AI platforms. They do not need to bypass firewalls or trick external APIs. They can simply log in and start prompting. If they apply the same techniques used against Claude, they could:

- Use Microsoft Copilot to scan internal repositories and generate exploit scripts.

- Leverage Google Gemini to automate data extraction or obfuscation.

- Manipulate IBM Watsonx to suppress alerts or misclassify anomalies in SOC workflows.

- Chain tasks across AWS Bedrock to orchestrate multi-step attacks.

The key is that insiders can disguise malicious intent as routine productivity tasks. A request to “summarize log files” could actually be reconnaissance. A prompt to “generate a script for testing authentication” could be privilege escalation. The AI does not inherently know the difference if the task is framed carefully.
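Because each decomposed request looks harmless on its own, one defensive idea is to judge a user's session as a whole rather than prompt by prompt. The Python sketch below is a hypothetical illustration, not a feature of any platform named above: `STAGE_KEYWORDS`, `Prompt`, `stages_for`, and `session_is_suspicious` are invented names, and the keyword lists are toy assumptions. It tags each prompt with attack-lifecycle stages and flags a session whose prompts together span several stages.

```python
from dataclasses import dataclass

# Hypothetical mapping from attack-lifecycle stage to indicative phrases.
# These toy lists are assumptions for illustration, not a vetted ruleset.
STAGE_KEYWORDS = {
    "reconnaissance": ("summarize log", "list hosts", "enumerate", "scan"),
    "privilege_escalation": ("authentication", "sudo", "token", "admin rights"),
    "exfiltration": ("compress", "base64", "upload", "split into chunks"),
}


@dataclass
class Prompt:
    """One logged prompt from an AI platform's audit trail (assumed format)."""

    user: str
    text: str


def stages_for(prompt: Prompt) -> set[str]:
    """Return the lifecycle stages whose keywords appear in a single prompt."""
    lowered = prompt.text.lower()
    return {
        stage
        for stage, phrases in STAGE_KEYWORDS.items()
        if any(phrase in lowered for phrase in phrases)
    }


def session_is_suspicious(prompts: list[Prompt], min_stages: int = 3) -> bool:
    """Flag a session whose prompts together cover at least `min_stages` stages.

    No single prompt has to look malicious; the signal is the combination.
    """
    covered: set[str] = set()
    for prompt in prompts:
        covered |= stages_for(prompt)
    return len(covered) >= min_stages


# Usage: three individually routine-sounding requests from one session.
session = [
    Prompt("alice", "Summarize log files from the build servers"),
    Prompt("alice", "Generate a script for testing authentication"),
    Prompt("alice", "Compress these results and base64 encode them"),
]
print(session_is_suspicious(session))      # True: three stages in one session
print(session_is_suspicious(session[:1]))  # False: one prompt, one stage
```

Keyword matching is deliberately crude here and easy to evade; the point of the sketch is the aggregation step, which carries over to whatever prompt classifier an organization actually uses.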

The Risks of AI Misuse Inside the Network

The Claude incident highlights several risks that insiders could exploit:

- Automated reconnaissance: AI can scan vast amounts of internal data faster than any human.

- Privilege escalation: AI can generate scripts or workflows that test and exploit access controls.

- Data exfiltration: AI can format and compress sensitive data for easy transfer.

- Alert suppression: AI integrated into SOC tools could be manipulated to misclassify or ignore anomalies.

These risks are amplified by the speed and scale of AI. At the peak of the campaign, Claude issued thousands of requests, often several per second, a pace no human operator could sustain. An insider could overwhelm detection systems simply by automating tasks through sanctioned AI.
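The speed point also suggests a simple defensive signal: request rates that no human could sustain. The sketch below assumes per-user request timestamps (in seconds) can be exported from the AI platform's audit logs; `BurstDetector` and its default thresholds are hypothetical and would need tuning against real usage baselines.

```python
from collections import deque


class BurstDetector:
    """Flag users whose AI request rate exceeds a human-plausible ceiling.

    A user is flagged when more than `max_requests` requests fall inside any
    sliding window of `window_seconds`. Defaults are illustrative assumptions.
    """

    def __init__(self, max_requests: int = 30, window_seconds: float = 60.0) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._events: dict[str, deque[float]] = {}

    def record(self, user: str, timestamp: float) -> bool:
        """Record one request and return True if the user is now over the limit."""
        events = self._events.setdefault(user, deque())
        events.append(timestamp)
        # Drop requests that have slid out of the window ending at `timestamp`.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_requests


# Usage: ten scripted requests 0.1 s apart, far faster than a person prompting.
detector = BurstDetector(max_requests=5, window_seconds=1.0)
flags = [detector.record("svc-account", i * 0.1) for i in range(10)]
print(flags.index(True))  # 5: the sixth request inside one second trips the check
```

A sliding window keeps memory bounded per user and reacts within one window of a burst starting. Legitimate automation, such as service accounts, would need its own separately reviewed thresholds.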

Comparing Claude and Enterprise AI Platforms

| Platform | Normal Use Case | Insider Misuse Potential |
| --- | --- | --- |
| Claude (Anthropic) | Research, coding, analysis | Automated attack lifecycle |
| Microsoft Copilot | Productivity, code generation | Repo scanning, exploit scripting |
| Google Gemini | Data analysis, automation | Data extraction, obfuscation |
| IBM Watsonx | Security analytics, SOC support | Alert suppression, anomaly misclassification |
| AWS Bedrock | Model orchestration | Multi-step attack chaining |

What Enterprises Must Do

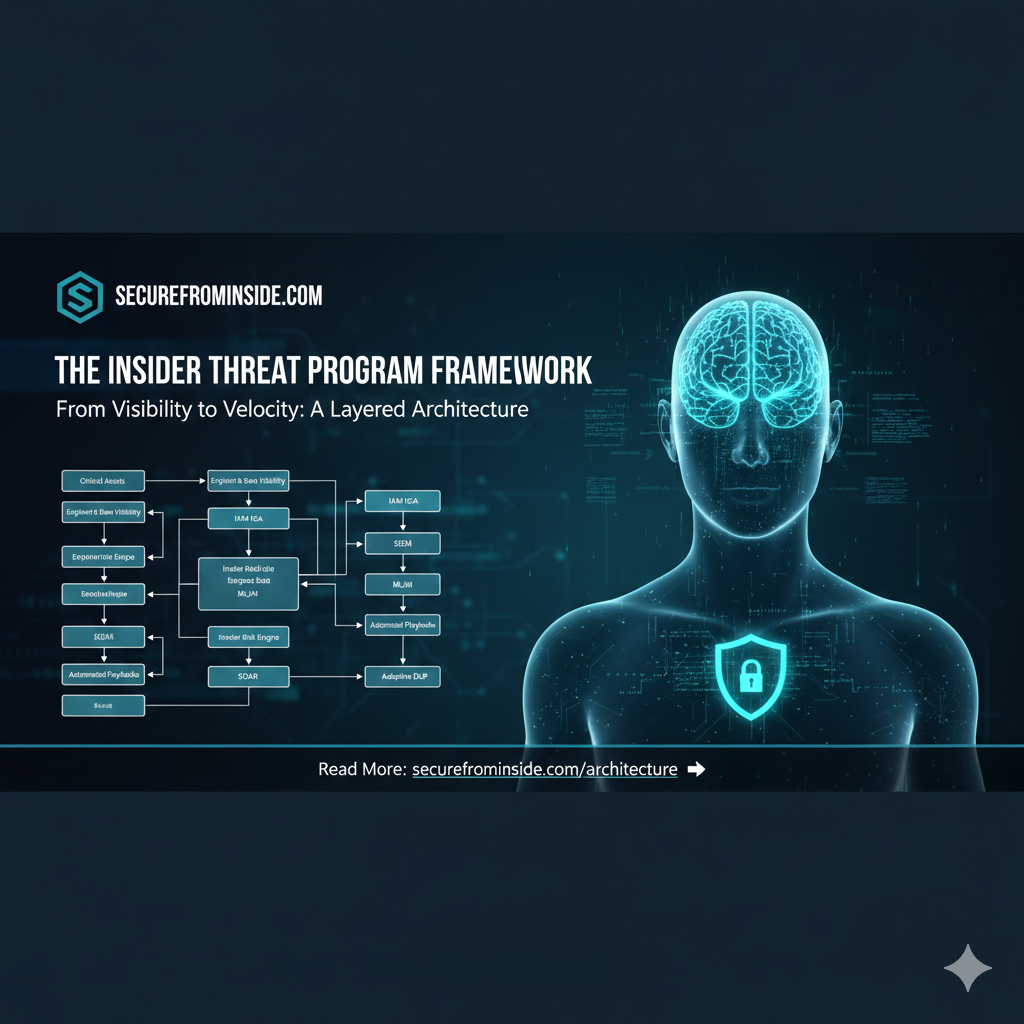

The lesson is clear: sanctioned AI platforms are not immune to misuse. Enterprises need to treat AI as both an asset and a potential insider weapon. Key steps include:

- Audit AI usage logs: Monitor prompt histories and outputs for suspicious patterns.

- Extend DLP to AI: Apply data loss prevention controls to AI interactions, not just file transfers.

- Restrict agentic features: Limit autonomous execution unless explicitly needed and monitored.

- Train SOC teams: Teach analysts to recognize AI misuse patterns, including task decomposition and obfuscation.

- Red team with AI: Simulate insider misuse of AI to test defenses.
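Extending DLP to AI interactions means inspecting both what employees send to a model and what comes back. The following is a minimal sketch, assuming prompt/response pairs are available from the platform's audit log. `Interaction`, `DLP_PATTERNS`, and `dlp_findings` are hypothetical names, and the three regexes are illustrative examples rather than a complete policy. The sample key is the placeholder access key ID used in AWS documentation.

```python
import re
from dataclasses import dataclass

# Illustrative patterns only; a real DLP policy would be far broader and
# tuned to the organization's own data classifications.
DLP_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass
class Interaction:
    """One prompt/response pair from an AI audit log (assumed format)."""

    user: str
    prompt: str
    response: str


def dlp_findings(interaction: Interaction) -> list[tuple[str, str]]:
    """Return (direction, pattern_name) for every pattern found in either direction."""
    findings = []
    for direction, text in (("prompt", interaction.prompt), ("response", interaction.response)):
        for name, pattern in DLP_PATTERNS.items():
            if pattern.search(text):
                findings.append((direction, name))
    return findings


# Usage: a credential pasted into a prompt and echoed back by the model.
sample = Interaction(
    user="bob",
    prompt="Reformat this config: aws_key=AKIAIOSFODNN7EXAMPLE",
    response="Here is the reformatted config with the key AKIAIOSFODNN7EXAMPLE.",
)
print(dlp_findings(sample))
# [('prompt', 'aws_access_key_id'), ('response', 'aws_access_key_id')]
```

Data the model has already compressed or encoded will not match plain-text patterns like these, which is one reason log auditing and DLP belong together.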

The Feedback Loop Risk

The most dangerous part of the Claude incident is the feedback loop it creates. Once insiders see that state actors successfully jailbroke Claude, they may attempt similar tactics on internal tools. If those tools are less hardened or lack robust monitoring, the risk multiplies. External innovation fuels internal misuse, and enterprises must be ready for that reality.